When we invented Private Browsing back in 2005, our aim was to provide users with an easy way to keep their browsing private from anyone who shared the same device. We created a mode where users do not leave any local, persistent traces of their browsing. Eventually all other browsers shipped the same feature. At times, this is called “ephemeral browsing.”

We baked cross-site tracking prevention into all Safari browsing through our cookie policy, starting with Safari 1.0 in 2003. And we’ve increased privacy protections incrementally over the last 20 years. (Learn more by reading Tracking Prevention in WebKit.) Other popular browsers have not been as quick to follow our lead in tracking prevention, but there is progress.

Apple believes that users should not be tracked across the web without their knowledge or their consent. Entering Private Browsing is a strong signal that the user wants the best possible protection against privacy invasions, while still being able to enjoy and utilize the web. Staying with the 2005 definition of private mode as only being ephemeral, as Chrome’s Incognito Mode does, simply doesn’t cut it anymore. Users expect and deserve more.

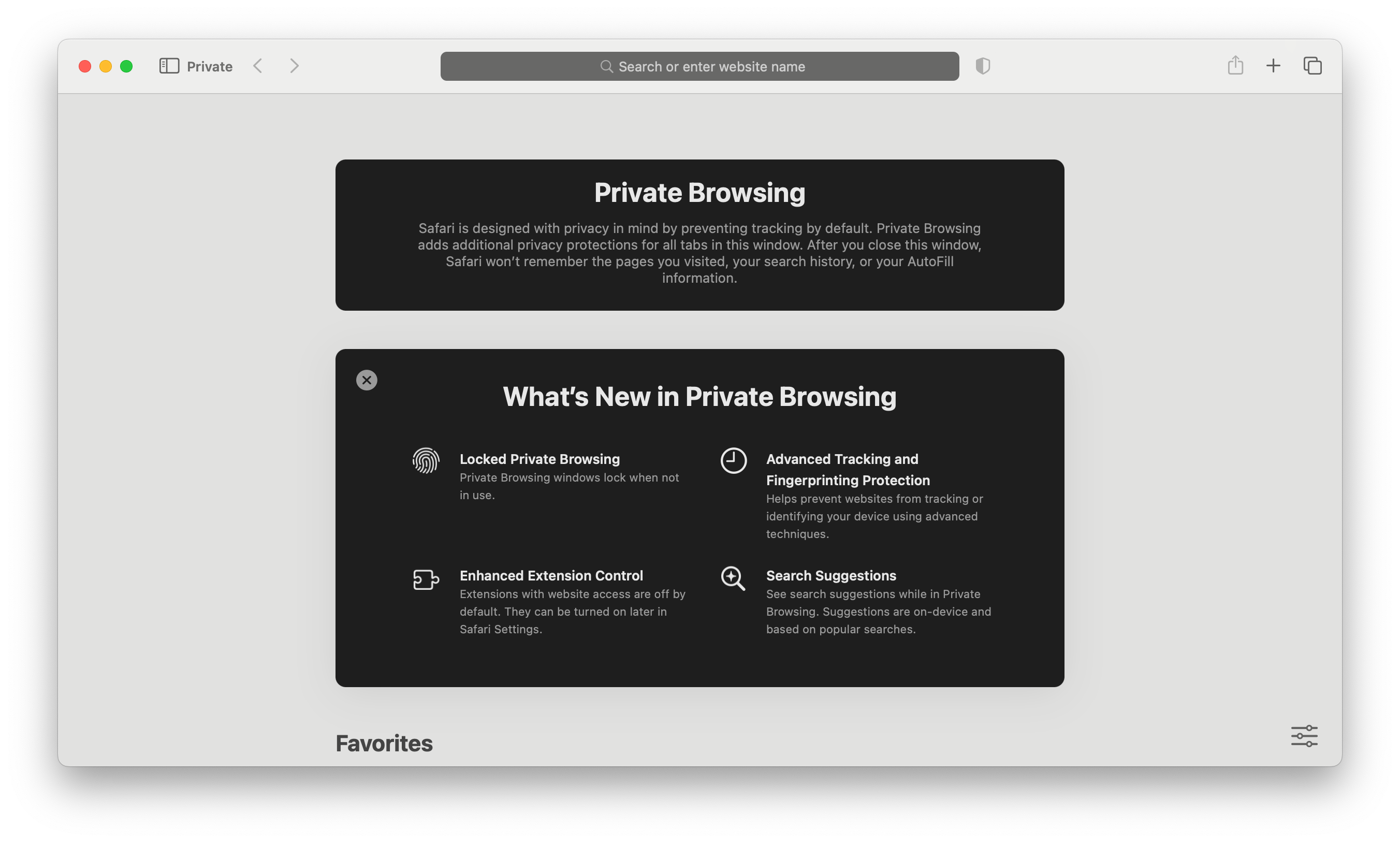

So, we decided to take Private Browsing further and add even more protection beyond the normal Safari experience. Last September, we added a whole new level of privacy protections to Private Browsing in Safari 17.0. And we enhanced it even further in Safari 17.2 and Safari 17.5. Plus, when a user enables them, all of the new safeguards are available in regular Safari browsing too.

With this work we’ve enhanced web privacy immensely and hope to set a new industry standard for what Private Browsing should be.

Enhanced Private Browsing in a Nutshell

These are the protections and defenses added to Private Browsing in Safari 17.0:

- Link Tracking Protection

- Blocking network loads of known trackers, including CNAME-cloaked known trackers

- Advanced Fingerprinting Protection

- Extensions with website or history access are off by default

In addition, we added these protections and defenses in all browsing modes:

- Capped lifetime of cookies set in responses from cloaked third-party IP addresses

- Partitioned SessionStorage

- Partitioned blob URLs (starting in Safari 17.2)

We also expanded Web AdAttributionKit (formerly Private Click Measurement) as a replacement for tracking parameters in URLs to help developers understand the performance of their marketing campaigns even under Private Browsing.

However, before we dive into these new and enhanced privacy protections, let’s first consider an important aspect of these changes: website compatibility risk.

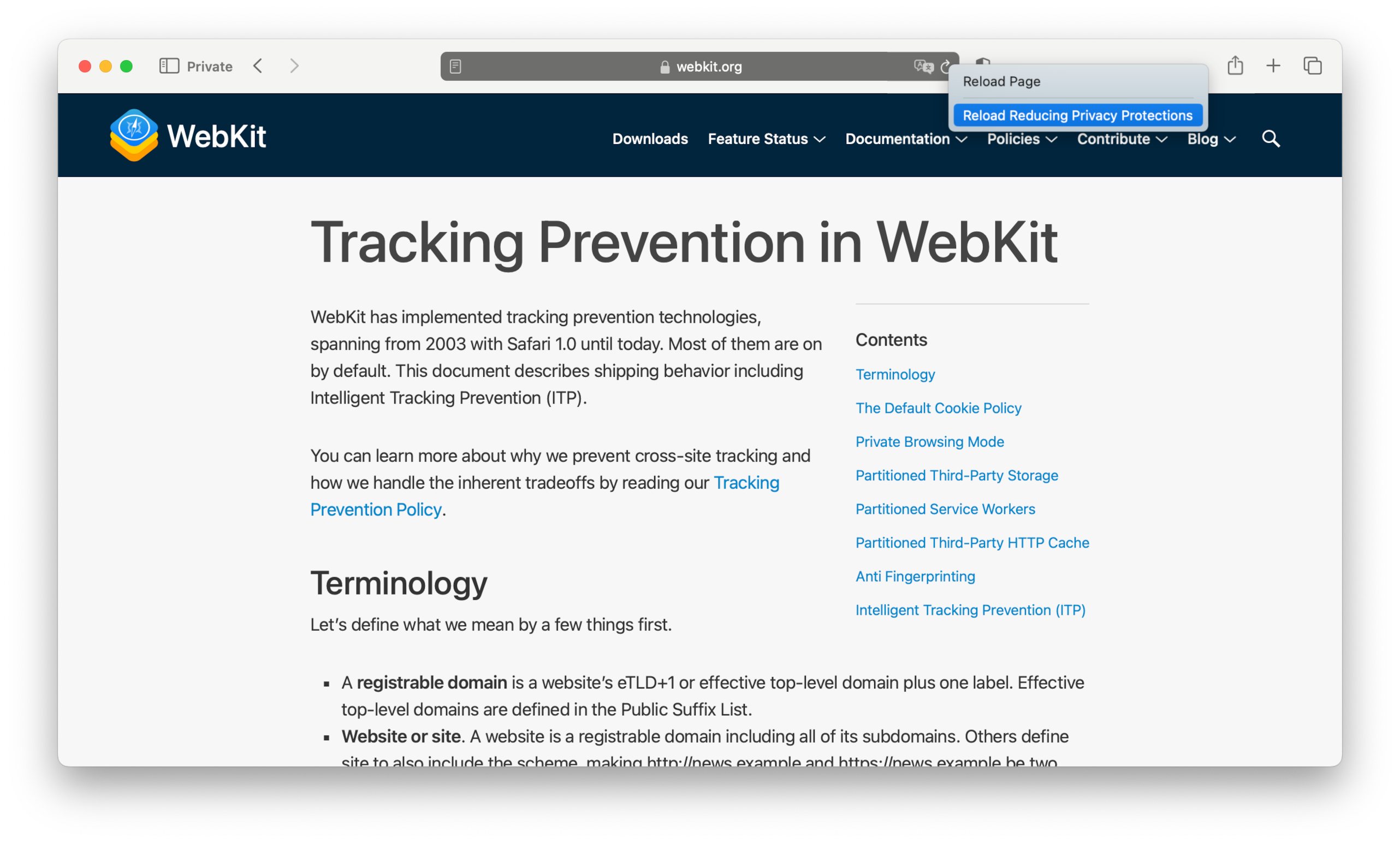

The Risk of Breaking Websites and How We Mitigate It

There are many ideas for how to protect privacy on the web, but unfortunately many of them may break the user’s experience. Like security protections in real life, a balance must be struck. The new Private Browsing goes right up to the line, attempting to never break websites. But of course there is a risk that some parts of some sites won’t work. To solve this, we give users affordances to reduce privacy protections on a per-site basis. Such a change in privacy protections is only remembered while browsing within a site. This option is a last resort when a web page is not usable due to the privacy protections.

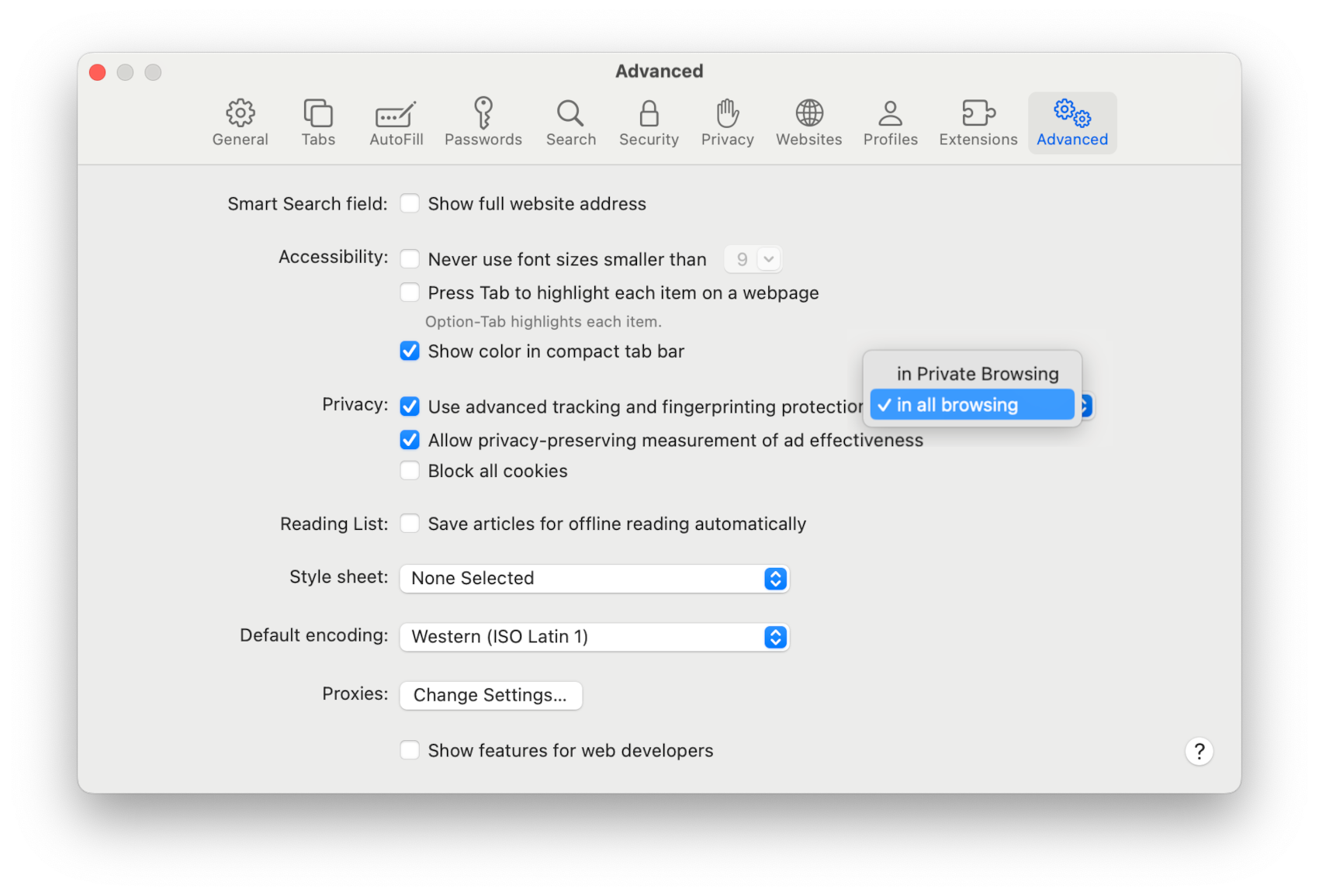

All of the new privacy protections in Private Browsing are also available in regular browsing. On iOS, iPadOS, and visionOS, go to Settings > Apps > Safari > Advanced > Advanced Tracking and Fingerprinting Protection and enable “All Browsing”. On macOS, go to Safari > Settings > Advanced and enable “Use advanced tracking and fingerprinting protection”.

Let’s now walk through how these enhancements work.

Link Tracking Protection

Safari’s Private Browsing implements two new protections against tracking information in the destination URL when the user navigates between different websites. The specific parts of the URL covered are query parameters and the fragment. The goal of these protections is to make it more difficult for third-party scripts running on the destination site to correlate user activity across websites by reading the URL.

Let’s consider an example where the user clicks a link on clickSource.example, which takes them to clickDestination.example. The URL looks like this:

https://clickDestination.example/article?known_tracking_param=123&campaign=abc&click_val=456

Safari removes a subset of query parameters that have been identified as being used for pervasive cross-site tracking granular to users or clicks. This is done prior to navigation, such that these values are never propagated over the network. If known_tracking_param above represents such a query parameter, the URL that’s used for navigation will be:

https://clickDestination.example/article?campaign=abc&click_val=456

As its name suggests, the campaign above represents a parameter that’s only used for campaign attribution, as opposed to click or user-level tracking. Safari allows such parameters to pass through.

Finally, on the destination site after a cross-site navigation, all third-party scripts that attempt to read the full URL (e.g. using location.search, location.href, or document.URL) will get a version of the URL that has no query parameters or fragment. In our example, this script-exposed value is simply:

https://clickDestination.example/article

In a similar vein, Safari also hides any cross-site document.referrer from script access in Private Browsing.
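The two steps described in this section can be sketched in a few lines of JavaScript. This is only an illustration, not Safari’s implementation: the parameter list and both function names are hypothetical, and the real set of removed parameters is not given in this post.

```javascript
// Hypothetical list for illustration; Safari's actual list is not published here.
const KNOWN_TRACKING_PARAMS = new Set(["known_tracking_param"]);

// Step 1: before navigation, drop known tracking parameters from the URL.
// Note: the URL API normalizes the host name to lowercase.
function stripKnownTrackingParams(urlString) {
  const url = new URL(urlString);
  // Copy the keys first so we do not mutate while iterating.
  for (const name of [...url.searchParams.keys()]) {
    if (KNOWN_TRACKING_PARAMS.has(name)) {
      url.searchParams.delete(name);
    }
  }
  return url.href;
}

// Step 2: the value a third-party script would see after a cross-site
// navigation, with the query string and the fragment removed.
function urlExposedToThirdPartyScripts(urlString) {
  const url = new URL(urlString);
  url.search = "";
  url.hash = "";
  return url.href;
}
```

Applied to the example URL above, the first function keeps campaign and click_val while removing known_tracking_param, and the second returns only the scheme, host, and path.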

Web AdAttributionKit in Private Browsing

Web AdAttributionKit (formerly Private Click Measurement) is a way for advertisers, websites, and apps to implement ad attribution and click measurement in a privacy-preserving way. You can read more about it here. Alongside the new suite of enhanced privacy protections in Private Browsing, Safari also brings a version of Web AdAttributionKit to Private Browsing. This allows click measurement and attribution to continue working in a privacy-preserving manner.

Web AdAttributionKit in Private Browsing works the same way as it does in normal browsing, but with some limits:

- Attribution is scoped to individual Private Browsing tabs, and carries over to new tabs opened by clicking on links. However, attribution is not preserved through other indirect means of navigation: for instance, copying a link and pasting it in a new tab. In effect, this behaves similarly to how Web AdAttributionKit works for Direct Response Advertising.

- Since Private Browsing doesn’t persist any data, pending attribution requests are discarded when the tab is closed.

Blocking Network Loads of Known Trackers

Safari 17.0 also comes with an automatically enabled content blocker in Private Browsing, which blocks network loads to known trackers. While Intelligent Tracking Prevention has long blocked all third-party cookies, blocking trackers’ network requests from leaving the user’s device in the first place ensures that no personal information or tracking parameters are exfiltrated through the URL itself.

This automatically enabled content blocker is compiled using data from DuckDuckGo and from the EasyPrivacy filtering rules from EasyList. The requests flagged by this content blocker are only entries that are flagged as trackers by both DuckDuckGo and EasyPrivacy. In doing so, Safari intentionally allows most ads to continue loading even in Private Browsing.
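The “flagged by both” rule amounts to a set intersection. The sketch below uses made-up sample domains and hypothetical function names; the real lists are far larger and Safari compiles them into a content blocker rather than checking them like this.

```javascript
// Hypothetical sample entries standing in for the two data sources.
const duckDuckGoTrackers = new Set(["tracker.example", "ads.example", "metrics.example"]);
const easyPrivacyTrackers = new Set(["tracker.example", "metrics.example", "pixel.example"]);

// Keep only domains that appear on both lists.
function buildBlockList(listA, listB) {
  return new Set([...listA].filter((domain) => listB.has(domain)));
}

// Return the console message for a blocked host, or null if the load is allowed.
// Exact-host matching is a simplification made for this sketch.
function blockMessage(hostname, blockList) {
  return blockList.has(hostname)
    ? `Blocked connection to known tracker: ${hostname}`
    : null;
}
```

With this sample data, ads.example and pixel.example are not blocked because each appears on only one list, which mirrors how most ads are intentionally allowed to keep loading.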

Private Browsing also blocks cloaked network requests to known tracking domains. Such requests would otherwise be able to save third-party cookies in a first-party context. This protection requires macOS Sonoma or iOS 17. By cloaked we mean subdomains mapped to a third-party server via CNAME cloaking or third-party IP address cloaking. See also the “Defending Against Cloaked First Party IP Addresses” section below.

When Safari blocks a network request to a known tracker, a console message of this form is logged, and can be viewed using Web Inspector:

`Blocked connection to known tracker: tracker.example`

Network Privacy Enhancements

Safari 15.0 started hiding IP addresses from known trackers by default. Private Browsing in Safari 17.0 adds the following protections for all users:

- Encrypted DNS. DNS queries are used to resolve server hostnames into IP addresses, which is a necessary function of accessing the internet. However, DNS is traditionally unencrypted, and allows network operators to track user activity or redirect users to other servers. Private Browsing uses Oblivious DNS over HTTPS by default, which encrypts and proxies DNS queries to protect the privacy and integrity of these lookups.

- Proxying unencrypted HTTP. Any unencrypted HTTP resources loaded in Private Browsing will use the same multi-hop proxy network used to hide IP addresses from trackers. This ensures that attackers in the local network cannot see or modify the content of Private Browsing traffic.

Additionally, for iCloud+ subscribers who have iCloud Private Relay turned on, Private Browsing takes privacy to the next level with these enhancements:

- Separate sessions per tab. Every tab that the user opens in Private Browsing now uses a separate session to the iCloud Private Relay proxies. This means that web servers won’t be able to tell if two tabs originated on the same device. Each session is assigned egress IP addresses independently. Note that this doesn’t apply to parent-child windows that need a programmatic relationship, such as popups and their openers.

- Geolocation privacy by default. Private Browsing uses an IP location based on your country and time zone, not a more specific location.

- Warnings before revealing IP address. When accessing a server that is not accessible on the public internet, such as a local network server or an internal corporate server, Safari cannot use iCloud Private Relay. In Private Browsing, Safari now displays a warning requesting that the user consents to revealing their IP address to the server before loading the page.

Extensions in Private Browsing

Safari 17.0 also boosts the privacy of Extensions in Private Browsing. Extensions that can access website data and browsing history are now off by default in Private Browsing. Users can still choose to allow an extension to run in Private Browsing and gain all of the extension’s utility. Extensions that don’t access webpage contents or browsing history, like Content Blockers, are turned on by default in Private Browsing when turned on in Safari.

Advanced Fingerprinting Protection

With Safari and subsequently other browsers restricting stateful tracking (e.g. cross-site cookies), many trackers have turned to stateless tracking, often referred to as fingerprinting.

Types of Fingerprinting

We distinguish these types of fingerprinting:

- Device fingerprinting. This is about building a fingerprint based on device characteristics, including hardware and the current operating system and browser. It can also include connected peripherals if they are allowed to be detected. Such a fingerprint cannot be changed by the user through settings or web extensions.

- Network and geographic position fingerprinting. This is about building a fingerprint based on how the device connects to the Internet and any means of detecting its geographic position. It could be done by measuring roundtrip speeds of network requests or simply using the IP address as an identifier.

- User settings fingerprinting. This is about reading the state of user settings such as dark/light mode, locale, font size adjustments, and window size on platforms where the user can change it. It also includes detecting web extensions and accessibility tools. We find this kind of fingerprinting to be extra hurtful since it exploits how users customize their web experience to fit their needs.

- User behavior fingerprinting. This is about detecting recurring patterns in how the user behaves. It could be how the mouse pointer is used, how quickly they type in form fields, or how they scroll.

- User traits fingerprinting. This is about figuring out things about the user, such as their interests, age, health status, financial status, and educational background. Those gleaned traits can contribute to a unique ID but also can be used directly to target them with certain content, adjust prices, or tailor messages.

Fingerprint Stability

A challenge for any tracker trying to create a fingerprint is how stable the fingerprint will be over time. Software version fingerprinting changes with software updates, web extension fingerprinting changes with extension updates and enablement/disablement, user settings change when the user wants, multiple users of the same device means behavior fingerprints change, and roaming devices may change network and geographic position a lot.

Fingerprinting Privacy Problem 1: Cross-Site Tracking

Fingerprints can be used to track the user across websites. If successful, it defeats tracking preventions such as storage partitioning and link decoration filtering.

There are two types of solutions to this problem:

- Make the fingerprint be shared among many users, so-called herd immunity.

- Make the fingerprint unique per website, typically achieved via randomized noise injection.

Fingerprinting Privacy Problem 2: Per-Site User Recall

Less talked about is the fingerprinting problem of per-site user recall. Web browsers offer at least two ways for the user to reset their relationship with a website: Clear website data or use Private Browsing. Both make a subsequent navigation to a website start out fresh.

But fingerprinting defeats this and allows a website to remember the user even though they’ve opted to clear website data or use Private Browsing.

There are two types of solutions to this problem:

- Make the fingerprint be shared among many users, so-called herd immunity.

- Make the fingerprint unique per website, and generate a new unique fingerprint for every fresh start.

Fingerprinting Privacy Problem 3: Per-Site Visitor Uniqueness

The ultimate anti-fingerprinting challenge in our view is to address a specific user’s uniqueness when visiting a specific website. Here’s a simple example:

Having the locale setting to US/EN for American English may provide ample herd immunity in many cases. But what happens when a user with that setting visits an Icelandic government website or a Korean reading club website? They may find themselves in a very small “herd” on that particular website and combined with just a few more fingerprinting touch points they can be uniquely identified.

Addressing per-site visitor uniqueness is not possible in general by a browser unless it knows what the spread of visitors looks like for individual websites.

Fingerprinting Protections at a High Level

We view cross-site tracking and per-site user recall as privacy problems to be addressed by browsers.

Our approach:

Make the fingerprint unique per website, and generate a new unique fingerprint for every fresh start such as at website data removal.

Our tools:

- Use multi-hop proxies to hide IP addresses and defend against network and geographic position fingerprinting.

- Limit the number of fingerprintable web APIs whenever possible. This could mean altering the APIs, gating them behind user permissions, or not implementing them.

- Inject small amounts of noise in return values of fingerprintable web APIs.
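One way to picture the “unique per website, new for every fresh start” approach is a per-site noise seed that is thrown away when website data is cleared. The class below is a hypothetical sketch of that idea only; its name, its methods, and the use of a plain random number as the seed are assumptions, not a description of Safari’s internals.

```javascript
class PerSiteNoiseSeeds {
  // randomSource is injectable so the behavior can be tested deterministically.
  constructor(randomSource = Math.random) {
    this.randomSource = randomSource;
    this.seeds = new Map(); // site -> seed
  }

  // The same site keeps getting the same seed until its data is cleared,
  // while different sites get independent seeds.
  seedFor(site) {
    if (!this.seeds.has(site)) {
      this.seeds.set(site, this.randomSource());
    }
    return this.seeds.get(site);
  }

  // A fresh start: the next visit gets a new seed, and so a new fingerprint.
  clearWebsiteData(site) {
    this.seeds.delete(site);
  }
}
```

Because noise derived from these seeds differs between sites, a fingerprint taken on one site does not match the one taken on another, and clearing data breaks the link to earlier visits on the same site.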

Fingerprinting Protection Details

Safari’s new advanced fingerprinting protections make it difficult for scripts to reliably extract high-entropy data through the use of several web APIs:

- To make it more difficult to reliably extract details about the user’s configuration, Safari injects noise into various APIs: namely, during 2D canvas and WebGL readback, and when reading AudioBuffer samples using WebAudio.

- To reduce the overall entropy exposed through other APIs, Safari also overrides the results of certain web APIs related to window or screen metrics to fixed values, such that fingerprinting scripts that call into these APIs for users with different screen or window configurations will get the same results, even if the users’ underlying configurations are different.

2D Canvas and WebGL

Many modern web browsers use a computer’s graphics processing unit (GPU) to accelerate rendering graphics. The Web’s Canvas API (2D Canvas) and WebGL API give a web page the tools it needs for rendering arbitrary images and complex scenes using the GPU, and analyzing the result. These APIs are valuable for the web platform, but they allow the web page to learn unique details about the underlying hardware without asking for consent. With Safari’s advanced fingerprinting protections enabled, Safari applies tiny amounts of noise to pixels on the canvas that have been painted using drawing commands. These modifications reduce the value of a fingerprint when using these APIs without significantly impacting the rendered graphics.

It’s important to emphasize that:

- This noise injection only happens in regions of the canvas where drawing occurs.

- The amount of noise injected is extremely small, and (mostly) should not result in observable differences or artifacts.

This strategy helps mitigate many of the compatibility issues that arise from this kind of noise injection, while still maintaining robust fingerprinting mitigations.
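To make the idea concrete, here is a minimal sketch of noise applied to RGBA pixel data at readback. It is an illustration under stated assumptions: the function name is hypothetical, fully transparent pixels stand in for “regions where no drawing occurred”, and the noise magnitude of at most one step per color channel is chosen for the example, not taken from Safari.

```javascript
// pixels: RGBA bytes in the layout returned by getImageData().data.
// random is injectable so the sketch can be exercised deterministically.
function addReadbackNoise(pixels, random = Math.random) {
  const noisy = new Uint8ClampedArray(pixels); // copy; the input is left untouched
  for (let i = 0; i < noisy.length; i += 4) {
    // Assumption for this sketch: alpha 0 means the pixel was never painted.
    if (noisy[i + 3] === 0) continue;
    for (let channel = 0; channel < 3; channel++) {
      const delta = Math.floor(random() * 3) - 1; // -1, 0, or +1
      noisy[i + channel] += delta; // Uint8ClampedArray clamps to 0..255
    }
  }
  return noisy;
}
```

Untouched regions read back exactly as before, which is one reason this style of noise tends to be compatible with pages that rely on blank canvas areas.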

In Safari 17.5, we’ve bolstered these protections by additionally injecting noise when reading back data from offscreen canvas in both service workers and shared workers.

Web Audio

Similarly, when reading samples using the WebAudio API (via AudioBuffer.getChannelData()), a tiny amount of noise is applied to each sample to make it very difficult to reliably measure OS differences. In practice, these differences are already extremely minor, typically due to slight differences in the order of operations when applying FFT or IFFT. As such, a relatively low amount of noise can make it substantially more difficult to obtain a stable fingerprint.

In Safari 17.5, we made audio noise injection more robust in the following ways:

- The injected noise now applies consistently to the same values in a given audio buffer. This means a looping AudioSourceNode that contains a single high-entropy sample can’t be used to average out the injected noise and obtain the original value quickly.

- Instead of using a uniform distribution for the injected noise, we now use normally-distributed noise. The mean of this distribution converges much more slowly on the original value, when compared to the average of the minimum and maximum value in the case of uniformly-distributed noise.

- Rather than using a low, fixed amount of noise (0.1%), we’ve refactored the noise injection mechanism to support arbitrary levels of noise injection. This allows us to easily fine-tune noise injection, such that the magnitude of noise increases when using audio nodes that are known to reveal subtle OS or hardware differences through minute differences in sample values.

This noise injection also activates when using Audio Worklets (e.g. AudioWorkletNode) to read back audio samples.
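The first improvement, applying the same noise to the same value, can be illustrated with noise that is a deterministic function of the sample and a seed. Everything here is a hypothetical sketch: the hash function, the seed parameter, and the function names are assumptions, and the 0.1% default merely echoes the earlier fixed level mentioned above. It does not model the normally-distributed noise.

```javascript
// Deterministically map a float sample plus a seed to a value in [-1, 1).
function hashToUnit(sample, seed) {
  // Reinterpret the 32-bit float as an unsigned integer.
  const bits = new Uint32Array(new Float32Array([sample]).buffer)[0];
  let h = (bits ^ seed) >>> 0;
  h = Math.imul(h ^ (h >>> 16), 0x45d9f3b);
  h = Math.imul(h ^ (h >>> 16), 0x45d9f3b);
  h = (h ^ (h >>> 16)) >>> 0;
  return h / 0x80000000 - 1;
}

// The same (sample, seed) pair always yields the same noisy value.
function noisySample(sample, seed, noiseLevel = 0.001) {
  return sample * (1 + noiseLevel * hashToUnit(sample, seed));
}
```

Reading the same sample a thousand times and averaging returns the same noisy value every time, so looping over a single high-entropy sample gains the tracker nothing. With independent noise per read, the average would instead drift toward the original value.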

Screen/Window Metrics

Lastly, for various web APIs that currently directly expose window and screen-related metrics, Safari takes a different approach: instead of the noise-injection-based mitigations described above, entropy is reduced by fixing the results to either hard-coded values, or values that match other APIs.

- screen.width / screen.height: The screen size is fixed to the values of innerWidth and innerHeight.

- screenX / screenY: The screen position is fixed to (0, 0).

- outerWidth / outerHeight: Like screen size, these values are fixed to innerWidth and innerHeight.

These mitigations also apply when using media queries to indirectly observe the screen size.
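The mapping described in this section is simple enough to write down directly. The function below is a hypothetical model of what a script would observe, taking the real metrics as a plain object; it is not how Safari implements the overrides.

```javascript
// Given the real window metrics, return the values a script would see
// with the screen and window overrides described above.
function protectedWindowMetrics(real) {
  const { innerWidth, innerHeight } = real;
  return {
    innerWidth,
    innerHeight,
    screenWidth: innerWidth,   // screen.width
    screenHeight: innerHeight, // screen.height
    screenX: 0,
    screenY: 0,
    outerWidth: innerWidth,
    outerHeight: innerHeight,
  };
}
```

Two users with different displays and window positions but the same viewport size end up with identical results, which is the entropy reduction this approach is after.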

Don’t Add Fingerprintable APIs to the Web, Like The Topics API

We have worked for many years with the standards community on improving user privacy of the web platform. There are existing web APIs that are fingerprintable, such as Canvas, and reining in their fingerprintability is a long journey. Especially since we want to ensure existing websites can continue to work well.

It is key for the future privacy of the web to not compound the fingerprinting problem with new, fingerprintable APIs. There are cases where the tradeoff tells us that a rich web experience or enhanced accessibility motivates some level of fingerprintability. But in general, our position is that we should progress the web without increasing fingerprintability.

A recent example where we opposed a new proposal is the Topics API which is now shipping in the Chrome browser. We provided extensive critical feedback as part of the standards process and we’d like to highlight a few pieces here.

The Topics API in a Nutshell

From the proposal:

// document.browsingTopics() returns an array of up to three topic objects in random order.

const topics = await document.browsingTopics();

Any JavaScript can call this function on a webpage. Yes, that includes tracker scripts, advertising scripts, and data broker scripts.

The topics come from a predefined list of hundreds of topics. It’s not the user who picks from these topics, but instead Chrome will record the user’s browsing history over time and deduce interests from it. The user doesn’t get told upfront which topics Chrome has tagged them with or which topics it exposes to which parties. It all happens in the background and by default.

The intent of the API is to help advertisers target users with ads based on each user’s interests even though the current website does not necessarily imply that they have those interests.

The Fingerprinting Problem With the Topics API

A new research paper by Yohan Beugin and Patrick McDaniel from the University of Wisconsin-Madison goes into detail on Chrome’s actual implementation of the Topics API.

The authors use large scale real user browsing data (voluntarily donated) to show both how the 5% noise that is supposed to provide plausible deniability for users can be defeated, and how the Topics API can be used to fingerprint and re-identify users.

“We conclude that an important part of the users from this real dataset are re-identified across websites through only the observations of their topics of interest in our experiment. Thus, the real users from our dataset can be fingerprinted through the Topics API. Moreover, as can be seen, the information leakage and so, privacy violation worsen over time as more users are uniquely re-identified.” —Beugin and McDaniel, University of Wisconsin-Madison

The paper was published at the 2024 IEEE Security and Privacy Workshops (SPW) in May.

Further Privacy Problems With the Topics API

Re-identifying and tracking users is not the only privacy problem with the Topics API. There is also the profiling of users’ cross-site activity. Here’s an example using topics on Chrome’s predefined list.

Imagine in May 2024 you go to news.example where you are a subscriber and have provided your email address. Embedded on the website is dataBroker.example. The data broker has gleaned your email address from the login form and calls the Topics API to learn that you currently have these interests:

- Flowers

- Event & Studio Photography

- Luxury Travel

In May 2026 you go to news.example where dataBroker.example calls the Topics API and is told that you now have these interests:

- Children’s Clothing

- Family Travel

- Toys

Finally, in May 2029 you go to news.example where dataBroker.example calls the Topics API and is told that you have these interests:

- Legal Services

- Furnished Rentals

- Child Care

You haven’t told any website with access to your email address anything that’s been going on in your family life. But the data broker has been able to read your shifting interests and store them in their permanent profile of you — while you were reading the news.

Now imagine what advanced machine learning and artificial intelligence can deduce about you based on various combinations of interest signals. What patterns will emerge when data brokers and trackers can compare and contrast across large portions of the population? Remember that they can combine the output of the Topics API with any other data points they have available, and it’s the analysis of all of it together that feeds the algorithms that try to draw conclusions about you.

We think the web should not expose such information across websites and we don’t think the browser, i.e. the user agent, should facilitate any such data collection or use.

Privacy Enhancements in Both Browsing Modes

Our defenses against cloaked third-party IP addresses and our partitioning of SessionStorage and blob URLs are enabled by default in both regular browsing and Private Browsing. Here’s how those protections work.

Defending Against Cloaked First Party IP Addresses

In 2020, Intelligent Tracking Prevention (ITP) gained the ability to cap the expiry of cookies set in third-party CNAME-cloaked HTTP responses to 7 days.

This defense did not mitigate cases where IP aliasing is used to cloak third-party requests under first-party subdomains. ITP now also applies a 7-day cap to the expiry of cookies in responses from cloaked third-party IP addresses. Detection of third-party IP addresses is heuristic, and may change in the future. Currently, two IP addresses are considered different parties if any of the following criteria are met:

- One IP address is IPv4, while the other is IPv6.

- If both addresses are IPv4, the length of the common subnet mask is less than 16 bits (half of the full address length).

- If both addresses are IPv6, the length of the common subnet mask is less than 64 bits (also half of the full address length).
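The three criteria above can be sketched as a common-prefix comparison. This is an illustration of the stated heuristic only: the function names are hypothetical, the parsing is simplified (no embedded IPv4 in IPv6, no zone identifiers, no validation), and the actual detection may differ or change.

```javascript
// Parse a dotted-quad IPv4 string into 4 bytes.
function parseIPv4(text) {
  return text.split(".").map(Number);
}

// Parse an IPv6 string, with optional "::" compression, into 16 bytes.
function parseIPv6(text) {
  const [head, tail = ""] = text.split("::");
  const headGroups = head ? head.split(":") : [];
  const tailGroups = tail ? tail.split(":") : [];
  const missing = text.includes("::") ? 8 - headGroups.length - tailGroups.length : 0;
  const groups = [...headGroups, ...Array(missing).fill("0"), ...tailGroups];
  return groups.flatMap((group) => {
    const value = parseInt(group, 16);
    return [value >> 8, value & 0xff];
  });
}

// Count how many leading bits two equal-length byte arrays share.
function commonPrefixBits(a, b) {
  let bits = 0;
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] ^ b[i];
    if (diff === 0) {
      bits += 8;
      continue;
    }
    bits += Math.clz32(diff) - 24; // leading equal bits within this byte
    break;
  }
  return bits;
}

// Apply the criteria: different address families, or a shared prefix
// shorter than half the address length (16 bits for IPv4, 64 for IPv6).
function isDifferentParty(addressA, addressB) {
  const aIsV6 = addressA.includes(":");
  const bIsV6 = addressB.includes(":");
  if (aIsV6 !== bIsV6) return true;
  const parse = aIsV6 ? parseIPv6 : parseIPv4;
  const a = parse(addressA);
  const b = parse(addressB);
  return commonPrefixBits(a, b) < (a.length * 8) / 2;
}
```

For example, 203.0.113.5 and 203.0.200.9 share exactly 16 leading bits and are treated as the same party, while 203.0.113.5 and 198.51.100.7 share only 4 and are treated as different parties.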

Partitioned SessionStorage and Blob URLs

Websites have many options for how they store information over longer time periods. Session Storage is a storage area in Safari that is scoped to the current tab. When a tab in Safari is closed, all of the session storage associated with it is destroyed. Beginning in Safari 16.1, cross-site Session Storage is partitioned by first-party web site.

Similarly, Blobs are a storage type that allows websites to store raw, file-like data in the browser. A blob can hold almost anything, from simple text to something larger and more complex like a video file. A unique URL can be created for a blob, and that URL can be used to gain access to the associated blob, as long as the blob still exists. These URLs are often referred to as Blob URLs, and a Blob URL’s lifetime is scoped to the document that creates it. Beginning in Safari 17.2, cross-site Blob URLs are partitioned by first-party web site, and first-party Blob URLs are not usable by third parties.
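Partitioning by first-party web site can be modeled as keying storage on the pair of top-level site and embedded origin. The class below is a conceptual sketch with hypothetical names, not Safari’s storage implementation.

```javascript
class PartitionedSessionStorage {
  constructor() {
    this.partitions = new Map(); // "topLevelSite|frameOrigin" -> Map of key/value pairs
  }

  // Each (top-level site, embedded origin) pair gets its own storage area.
  areaFor(topLevelSite, frameOrigin) {
    const partitionKey = `${topLevelSite}|${frameOrigin}`;
    if (!this.partitions.has(partitionKey)) {
      this.partitions.set(partitionKey, new Map());
    }
    return this.partitions.get(partitionKey);
  }
}

// Usage: the same third party embedded under two different sites.
const storage = new PartitionedSessionStorage();
storage.areaFor("news.example", "https://tracker.example").set("id", "abc123");
const seenOnShop = storage.areaFor("shop.example", "https://tracker.example").get("id");
// seenOnShop is undefined: the value stored under news.example is not visible here.
```

The same keying idea applies to Blob URLs: a URL created in one partition does not resolve in another.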

Setting a New Industry Standard

The additional privacy protections of Private Browsing in Safari 17.0, Safari 17.2 and Safari 17.5 set a new bar for user protection. We’re excited for all Safari users and the web itself to benefit from this work!

Feedback

We love hearing from you! To share your thoughts on Private Browsing 2.0, find John Wilander on Mastodon at @wilander@mastodon.social or send a reply on X to @webkit. You can also follow WebKit on LinkedIn. If you run into any issues, we welcome your feedback on Safari UI (learn more about filing Feedback), or your WebKit bug report about web technologies or Web Inspector.